The Benefits of Teeth Whitening

2016.07.22

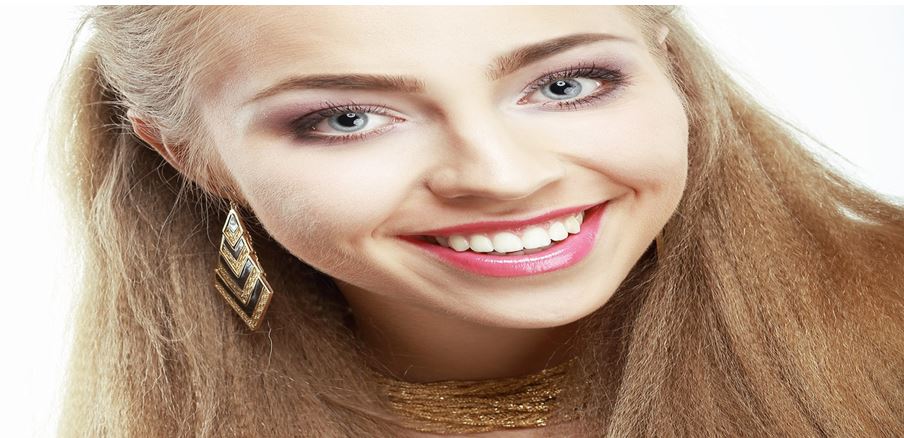

There is no denying it: as a culture, we highly regard white teeth. Virtually everywhere you look, there is an advertisement or message about white teeth or how to get whiter teeth. Even toothpastes advertise their teeth whitening abilities. Dentists offer a variety of procedures to whiten patients' teeth, including everything from whitening gels to lasers. White teeth, it seems, are a huge part of our modern standards for beauty.

With all of that said, what are the benefits of having whiter teeth? If you decide to work with a dentist to have one of these teeth whitening procedures done, how is it going to impact your day-to-day life? Here are a few of the advantages that patients see after they whiten their teeth.

1. You Get a Boost of Self-Esteem

A big part of dentistry concerns health, both in terms of pursuing preventative treatments and resolving existing issues. Good oral health not only helps to keep your teeth healthy as you grow older but also helps to stave off other medical conditions. For instance, keeping up with your visits to the dentist also keeps your gums healthy. Many experts have suggested a possible link between gum disease and heart disease. As you can see, good dental hygiene is connected to good overall bodily health.

However, while dental care is in part about health, it's also about cosmetics. There is a reason why dentistry and orthodontics focus so much on ensuring that kids have healthy and straight smiles. Having a beautiful smile is an important element in our culture, and it can impact a person's life in a variety of ways. Straight teeth aren't the only benchmark of a beautiful smile, either. The color of your teeth can also define how beautiful your smile is to other people and, by extension, to yourself. Yellow teeth aren't seen in the same flattering light. Having white teeth and a straight smile can help a person feel beautiful, comfortable, and confident in their own skin. As such, teeth whitening procedures can go a long way toward improving your self-esteem.

2. It Can Influence Your Personal Relationships

Self-esteem affects everything from how other people perceive you to how adventurous you are in your day-to-day life. For these reasons and more, having whiter teeth can have a strong, positive impact on the relationships that you share with other people. This statement applies to romantic relationships too. According to a study conducted by the American Academy of Cosmetic Dentistry, 99.7% of all Americans find a smile to be "an important social asset" and 96% say that a good smile "makes a person more appealing to members of the opposite sex."

3. Whiter Teeth Can Impact Your Career Success

If a beautiful smile can affect the way you interact with people in social situations, you can bet that it will also have an impact on your professional relationships. In a job interview, for instance, charm and confidence tend to count almost as much as the way you answer the hiring manager's questions. The same American Academy of Cosmetic Dentistry study found that 74% of adults believe that an "unattractive smile" can hurt a person in his or her career. From getting hired to establishing good relationships with co-workers to interacting with customers and clients, there are many areas in which a great smile (and the confidence it brings) can help you in your career.

Choosing the Teeth Whitening Strategy That Is Right for You

If you think that teeth whitening could improve your self-esteem and quality of life, it's time to start looking around for the right whitening strategy for you. There are over-the-counter teeth whitening products that you can buy, but in most cases you would be better off going to a dental professional for maximum impact. Store-bought whitening products tend to be hit or miss, but the right dentist will be able to offer you a strategy for teeth whitening that actually works.

Copyright © Fooyoh.com All rights reserved.